How Does Neural Machine Translation Work?

If you’ve ever taken a foreign language class long enough to learn how to form basic sentences, you know that translation is vastly more complicated than picking up a dictionary, looking up each word in the source sentence, and expecting the result to be a sentence that makes sense in another language. Because language is so complicated, accurate translation can’t be achieved by replacing each word in the source sentence with a literal, word-level translation. Context, nuance, “long-distance” relationships between words and other factors all come into play, making machine translation one of the biggest challenges for artificial intelligence (AI).

Thankfully, neural machine translation (NMT) is significantly changing and improving multilingual communication.

Where We’ve Been: Statistical Machine Translation

When machine translation engines were first developed, linguists worked with developers to create rules around a single language. The developers would then build the machine translation engine from these rules. However, as you can imagine, creating these rules one by one was tedious, the results were not always accurate, and the approach was hard to scale to the roughly 6,500 languages spoken around the world.

Statistical machine translation (SMT) attempted to lower these hurdles of expertise and time by using statistical models. SMT starts with a very large “corpus,” or body, of high-quality human translations. This corpus is used to deduce a statistical model of translation, which is then applied to untranslated source texts to suggest the most probable translation. The model is based on the frequency of phrases appearing in the training corpus. These phrases are loaded into a table that stores each phrase along with the number of times it occurs in the corpus.
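To make the phrase table idea concrete, here is a minimal sketch in Python. It assumes the corpus has already been split into aligned phrase pairs, which is a large simplification of what a real SMT system does. The sample data and the function names (`build_phrase_table`, `translation_probability`, `best_translation`) are invented for illustration.

```python
from collections import Counter

# Toy aligned phrase pairs (source phrase, target phrase); invented for illustration.
aligned_phrases = [
    ("good morning", "buenos días"),
    ("good morning", "buenos días"),
    ("good morning", "buen día"),
    ("thank you", "gracias"),
]

def build_phrase_table(pairs):
    """Count how often each (source, target) phrase pair occurs in the corpus."""
    return Counter(pairs)

def translation_probability(table, source, target):
    """Relative frequency of `target` among all translations seen for `source`."""
    total = sum(count for (src, _), count in table.items() if src == source)
    return table[(source, target)] / total if total else 0.0

def best_translation(table, source):
    """Pick the most frequent target phrase for `source`, or None if unseen."""
    candidates = {tgt: count for (src, tgt), count in table.items() if src == source}
    return max(candidates, key=candidates.get) if candidates else None

phrase_table = build_phrase_table(aligned_phrases)
print(best_translation(phrase_table, "good morning"))
```

Under these assumptions, the more often a phrase pair appears in the corpus, the higher its relative frequency and the more likely it is to be chosen.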

The more frequently a phrase pair is repeated in the training corpus, the more probable it is that the target translation is correct. Each phrase ranges from one to five words in length. SMT systems evaluate the fluency of a sentence in the target language a few words at a time using an N-gram language model, where “N” represents the number of words that are examined together. In an N-gram model where N=3, the SMT model assesses fluency as it generates a translation by looking at the N-1 previous words, or, in this case, the two previous words. This means that a statistical model’s fluency is limited by the “N” variable. Subsequences of “N” words will be very fluent, but the overall sentence might not be.
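The N=3 case can be sketched in a few lines of Python. This is a toy trigram model trained on three invented sentences, with no smoothing; the function names and the `<s>` and `</s>` padding tokens are assumptions made for the example, not part of any particular SMT system.

```python
from collections import Counter

def train_trigram_model(sentences):
    """Count trigrams and their two-word contexts across the training sentences."""
    trigram_counts = Counter()
    context_counts = Counter()
    for sentence in sentences:
        # Pad so that the first real word also has two "previous words".
        tokens = ["<s>", "<s>"] + sentence.lower().split() + ["</s>"]
        for i in range(2, len(tokens)):
            context = (tokens[i - 2], tokens[i - 1])
            trigram_counts[context + (tokens[i],)] += 1
            context_counts[context] += 1
    return trigram_counts, context_counts

def trigram_probability(model, w1, w2, w3):
    """Estimate P(w3 | w1, w2): only the two previous words are ever consulted."""
    trigram_counts, context_counts = model
    context_total = context_counts[(w1, w2)]
    return trigram_counts[(w1, w2, w3)] / context_total if context_total else 0.0

corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_trigram_model(corpus)
print(trigram_probability(model, "the", "cat", "sat"))
```

Because `trigram_probability` never sees anything beyond the two preceding words, each three-word window can score well even when the sentence as a whole does not hang together.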

SMT, like NMT, converts each word into a number and performs math on these numbers. However, NMT captures the similarity between words and assigns close values, like 3.16 and 3.21, to related words such as “but” and “except.” SMT numeric representations do not reflect similarity, so related words receive arbitrary, unrelated numbers. Further, many NMT models use recurrent neural networks. NMT evaluates the probability of the word generated at each position given all the previous words in the output sentence. As a result, NMT output is generally very fluent at the complete-sentence level, because it is not limited by N-grams and it captures which words are related to each other.
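The difference between similarity-aware numbers and arbitrary ones can be illustrated with a short Python sketch. In practice each word is usually represented by a list of numbers rather than a single value; the three-dimensional vectors below are made up for the example, as is the `cosine_similarity` helper.

```python
import math

# Hypothetical 3-dimensional word vectors; real models learn far larger ones.
embeddings = {
    "but": [0.9, 0.1, 0.3],
    "except": [0.85, 0.15, 0.35],
    "banana": [0.1, 0.9, 0.2],
}

def cosine_similarity(a, b):
    """Return a value near 1.0 when two vectors point in a similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(embeddings["but"], embeddings["except"]))
print(cosine_similarity(embeddings["but"], embeddings["banana"]))
```

With these invented values, “but” and “except” score much closer to each other than either does to “banana,” which is the kind of relationship an arbitrary word ID cannot express.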

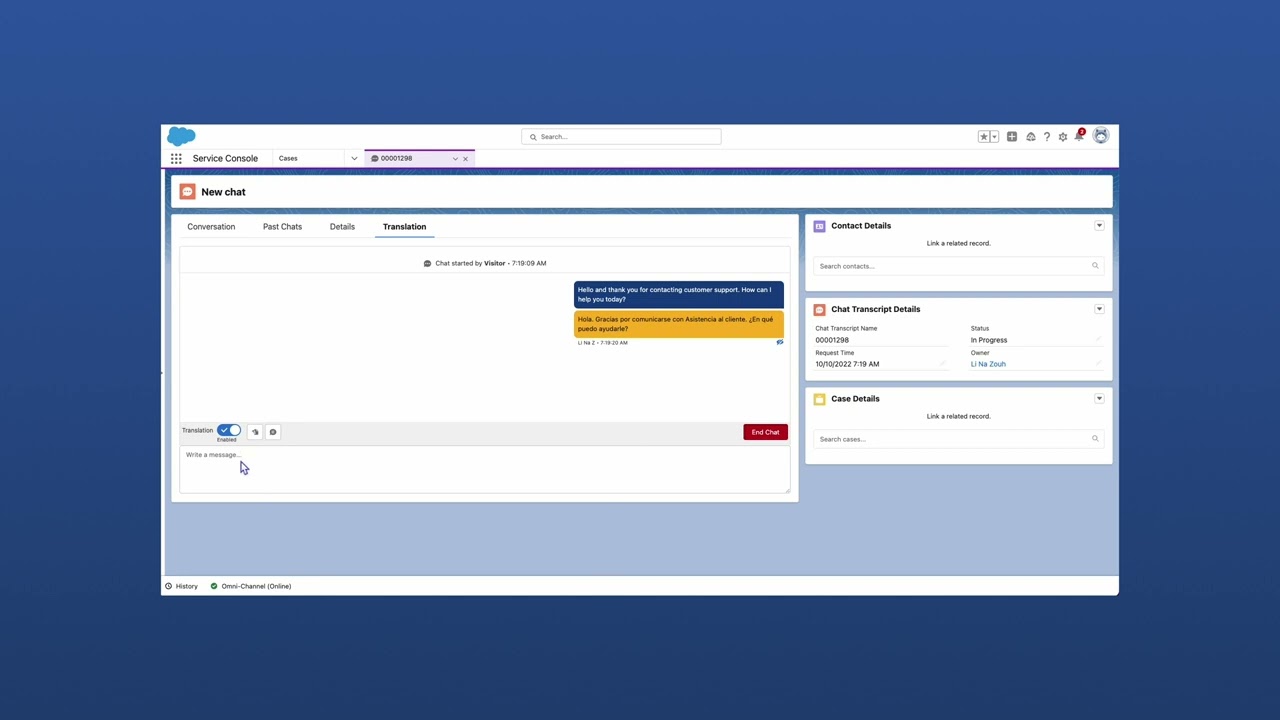

How Does Language I/O Use Neural Machine Translation?

Rather than re-inventing the MT wheel, Language I/O chose to build an NMT aggregation layer, meaning our platform integrates with the world’s best NMT engines. We intelligently select the best engine for each piece of content that is passed into our system for translation. In our extensive experience in this field, we have learned that some NMT vendors do a better job with a specific language or set of languages. Instead of trying to build one NMT engine that beats all of these existing vendors at all languages, we pick the best NMT engine for each language at the moment the translation request hits our server.
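As a rough illustration of per-language engine selection, the Python sketch below picks the highest-scoring engine for a language pair from a lookup table. It is not Language I/O’s actual implementation: the engine names, the scores, the `DEFAULT_ENGINE` fallback and the `select_engine` function are all hypothetical.

```python
# Hypothetical quality scores per language pair; not real vendor data.
ENGINE_SCORES = {
    ("en", "ja"): {"engine_a": 0.71, "engine_b": 0.83},
    ("en", "de"): {"engine_a": 0.88, "engine_b": 0.80},
}

# Assumed fallback when no scores exist for a language pair.
DEFAULT_ENGINE = "engine_a"

def select_engine(source_lang, target_lang, scores=ENGINE_SCORES):
    """Return the best-scoring engine for the pair, or the default if unknown."""
    candidates = scores.get((source_lang, target_lang))
    if not candidates:
        return DEFAULT_ENGINE
    return max(candidates, key=candidates.get)

print(select_engine("en", "ja"))
print(select_engine("en", "de"))
```

Keeping the scores in a table that is read at request time means the choice for a given language can change whenever the scores are updated, without touching the routing code.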

Furthermore, we don’t force our customers to dig up thousands of human-translated sentences for each language so that we can formally train a separate MT engine for every language each customer needs to support. Instead, our proprietary technology imposes preferred translations for company-specific terms on top of the engine we select. Stated another way, Language I/O uses the best existing neural machine translation engines on the market and layers its own technology on top. This layering allows us to accurately translate company- and industry-specific terms, as well as messy user-generated content such as slang, acronyms and abbreviations.
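One common way to impose preferred terms on top of a third-party engine is to swap each term for a placeholder before translation and restore the preferred translation afterward. The Python sketch below shows that general technique only; it is an assumption for illustration, not a description of Language I/O’s proprietary technology. The `apply_glossary` function, the placeholder format and the stand-in `fake_engine` are all hypothetical.

```python
import re

def apply_glossary(source_text, glossary, translate):
    """Protect glossary terms with placeholders, translate, then restore them."""
    placeholders = {}
    protected = source_text
    for i, (term, preferred) in enumerate(glossary.items()):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        if pattern.search(protected):
            protected = pattern.sub(token, protected)
            placeholders[token] = preferred
    # `translate` is any callable that maps source text to target text.
    translated = translate(protected)
    for token, preferred in placeholders.items():
        translated = translated.replace(token, preferred)
    return translated

def fake_engine(text):
    """Stand-in for a real NMT engine; it only knows one phrase."""
    return text.replace("Open the", "Abra el")

glossary = {"Control Panel": "Panel de Control"}
print(apply_glossary("Open the Control Panel", glossary, fake_engine))
```

This sketch assumes the engine passes placeholder tokens through unchanged, which a real engine may not always do, so a production system would need a more robust mechanism.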

Interested in learning more? We’d love to chat!