CX leaders have been among the most enthusiastic adopters of AI. The technology has many inventive applications in improving customer satisfaction, and we’re still uncovering potential use cases. It comes as no surprise that, according to a recent McKinsey report, customer service is the second business function (right after IT) to eagerly include AI in its day-to-day operations. AI-driven customer experience will soon be the norm, and businesses are racing to get ahead or catch up.

That said, companies have to walk a tightrope: There’s a fine line between collecting customer data to build powerful experiences and respecting customers’ privacy.

The tricks: Privacy concerns in AI-powered CX

AI for customer success is powered by customer data. Without it, you can’t build thoughtful experiences that delight customers at every touchpoint.

Consumers still want good experiences: 59% of consumers are likely to switch companies to get a better digital experience. But a great experience also includes strong security: Half of consumers rank personal data security as the top reason to do business with an online company. And 93% say confidence that their personal data will not be compromised is important when choosing who to transact with online, with 79% saying it’s very important.

But how can you ensure that confidential data remains secure and that your customers trust you? Here are some security risks you must first avoid.

Data collection and storage risks

Consumers want to know where their data is going. With online AI solutions now part of everyday work, the biggest security question is: What are your employees putting into AI, and is it going into unauthorized channels?

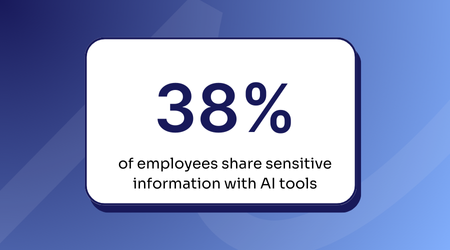

Recent data is alarming: According to the National Cybersecurity Alliance, 38% of employees share sensitive work information with AI tools without their employer’s permission.

In another survey, 22% of respondents reported using public generative AI tools at work daily. But here’s the catch: 17% believe there is value in inputting confidential company news into these tools.

In addition, 1 in 4 employees see no issue sharing personally identifiable information such as names, email addresses and phone numbers with AI solutions.

Several AI solutions also retain your customer data, presumably to train their algorithms. If your confidential information is left on a server somewhere, it’s vulnerable to a breach or data loss.

Bias and discrimination

AI learns from whatever data it’s fed. We’ve come a long way since Microsoft’s Tay, but AI is still not free of bias.

There are several examples of brands employing AI in different capacities and seeing racist, sexist, and homophobic tendencies emerge. Consider the sexist recruiting AI that Amazon scrapped, or the AI that was biased against Black candidates in the Harvard Business Review research.

One of Language I/O’s clients, a global retail brand, prioritized ethics above all else when implementing our AI translation solution. Their primary goal was to build an inclusive experience for all their customers, and for that, they needed every part of their CX (including us, their AI translation solution) to be free of biases and prejudices. Even the most impressive cutting-edge AI was going to fall flat if it didn’t make their customers feel welcome.

Misinformation and communication gaps

Grok, the AI chatbot on X (formerly Twitter), famously made defamatory statements against NBA star Klay Thompson, accusing him of throwing bricks through the windows of multiple houses in Sacramento, CA. The reason? It “misunderstood” the phrase “shooting bricks,” which simply means missing shots in basketball.

Language is complicated, even for humans. AI is not ready to handle idioms and nuanced language patterns until it has been adapted for the domain, language, context, brand and more.

From threat to treat: Adopting a privacy-first approach in CX

Navigating the complexities of AI in CX requires a balanced approach that prioritizes security and ethical considerations. As organizations increasingly integrate AI into their operations, they must recognize the dual role of AI as both a powerful tool for enhancing customer interactions and a potential risk to data privacy. Plus, with the haunted house that is the rapidly evolving regulatory landscape, it’s crucial for businesses to be proactive with their approach to privacy and look ahead.

Here are some ways to rethink privacy as a treat and build a privacy-first culture in CX.

Actively eliminate errors and biases

If you’re talking about the cloud, as in cloud computing, how is your AI going to differentiate that from the clouds in the sky? That’s why any AI you use needs to be aware of the context, adapted to your domain and fed with a glossary of your brand-specific terminology, so it can reliably return accurate responses.
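To make the glossary idea concrete, here is a minimal Python sketch. It assumes a hypothetical glossary that maps each ambiguous term to a preferred meaning per domain, then tags the term in a message so downstream systems know which sense is intended. The glossary entries, the domain labels and the `annotate_terms` function are all illustrative assumptions, not a description of how any particular product works.

```python
import re

# Hypothetical glossary: term -> {domain: preferred meaning}.
GLOSSARY = {
    "cloud": {"it": "cloud computing platform", "weather": "cloud in the sky"},
    "ticket": {"support": "support request", "travel": "travel ticket"},
}

# One pattern that finds any glossary term as a whole word, ignoring case.
TERM_RE = re.compile(
    r"\b(" + "|".join(map(re.escape, GLOSSARY)) + r")\b", re.IGNORECASE
)


def annotate_terms(text, domain):
    """Tag each glossary term in `text` with its meaning for `domain`.

    Terms with no entry for the given domain are left unchanged.
    """
    def tag(match):
        word = match.group(0)
        sense = GLOSSARY[word.lower()].get(domain)
        return f"{word} [{sense}]" if sense else word

    return TERM_RE.sub(tag, text)


print(annotate_terms("Move the ticket data to the cloud.", "it"))
print(annotate_terms("A cloud passed over.", "weather"))
```

A real system would need far more than a lookup table, but the sketch shows why domain context has to travel with the text: the same word resolves differently depending on the domain label passed in.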

Any organization committed to unbiased, inclusive AI must invest in diverse teams and stress-test its AI in unexpected directions. This helps surface and remove biases and errors before they reach your customers.

Use data encryption

To protect yourself from cyberattacks and weak links in your security ecosystem, opt for data encryption, and pair it with safeguards that keep sensitive details out of the pipeline in the first place. Language I/O, for example, automatically detects and masks personally identifiable information (PII) even before translation.
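As a rough illustration of masking PII before text leaves your systems, here is a minimal Python sketch. It is not Language I/O’s implementation; the two regular expressions, the placeholder format and the `mask_pii` and `unmask_pii` helpers are assumptions made for this example, and production-grade detection needs to cover far more than emails and phone numbers.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text):
    """Replace emails and phone numbers with numbered placeholders.

    Returns the masked text and a mapping from placeholder to original value.
    """
    found = {}

    def swap(kind):
        def replace(match):
            token = f"[{kind}_{len(found) + 1}]"
            found[token] = match.group(0)
            return token
        return replace

    masked = EMAIL_RE.sub(swap("EMAIL"), text)
    masked = PHONE_RE.sub(swap("PHONE"), masked)
    return masked, found


def unmask_pii(text, found):
    """Put the original values back once processing is complete."""
    for token, original in found.items():
        text = text.replace(token, original)
    return text


message = "Reach me at jane.doe@example.com or +1 (555) 010-9999."
masked, found = mask_pii(message)
print(masked)
print(unmask_pii(masked, found) == message)
```

The point of the design is that only the masked text is sent to an external service; the mapping back to the real values stays on your side and can be discarded as soon as the response is restored.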

Embrace zero data retention

Another must-have is zero data retention. Language I/O scrubs customer conversations from all of its processors as soon as the translation is complete. This is non-negotiable, even for the partners and vendors we work with.

The modern consumer is acutely aware of data privacy issues, and their trust hinges on an organization’s commitment to safeguarding personal information. That’s why companies must prioritize transparent communication about data usage and security measures to foster this trust. No one within your privacy ecosystem should be exempt from thorough evaluation, so you can stay on top of and prevent any threats from within or outside your organization.

Let’s continue this conversation.

- Join the #SecureAF movement (and we’ll send you a cool hat!)

- Discover the 10 questions you must ask every potential vendor as you assess them for security.

- Learn more about Language I/O’s commitment to industry-leading security.